How to record and convert videos for App Store Previews

I decided to evaluate the impact of App Store “Previews” on App Store Optimization (traffic) for Pictory. But creating a video takes time, especially if you plan to support half a dozen screen sizes and multiple languages! Doing it manually can quickly become a complex chore, even more so if you have to update the videos several times a year.

That’s why I chose to automatically create the previews with fastlane, and reuse my snapshot target to quickly generate the videos.

But while snapshotting an iOS simulator automatically creates images with the proper dimensions (e.g. 1170x2532px for iPhone 12 Pro), videos require more post-production work before Apple accepts them. We’ll go over Apple’s requirements too.

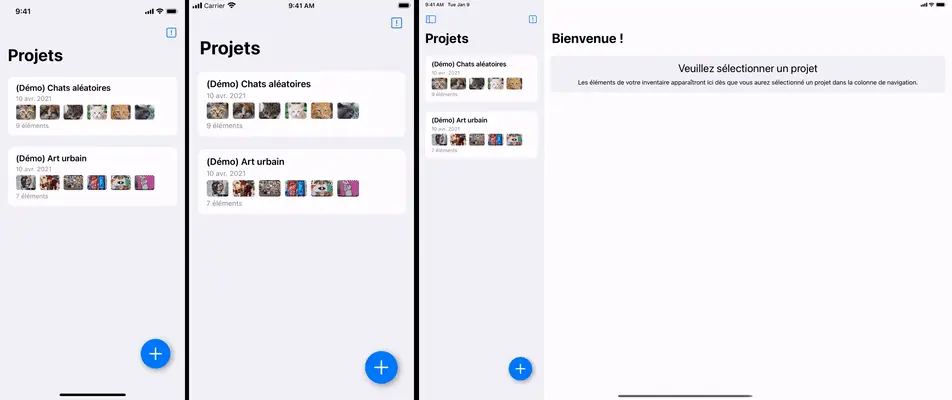

iPhone 12 Pro Max vs iPhone 8 Plus vs iPad

1. The tools to create App Store Previews

Let’s start with the prerequisites you need to have installed on your computer.

1.1 Fastlane

If you’re here, you probably know about fastlane. If not, just know it could save you a lot of time.

Using Homebrew? Run brew install fastlane.

1.2 Ffmpeg

By developers, for developers: ffmpeg is a well-known Swiss Army knife for video editing. Like imagemagick, ffmpeg is a key tool for developers, so I highly recommend learning the basics (and why not teach them to your designer friends too).

Using Homebrew? Run brew install ffmpeg.

2. How to record videos with UI Test and fastlane

Automation is neat, and fastlane makes things so much easier! However, it doesn’t natively handle video recording for now. There have been several discussions about this, but it hasn’t made it into fastlane’s built-in features yet 🤞. The sticking point seems to be how to make the simulator (running the test) communicate with the terminal (running fastlane) to start / stop the video recording.

I eventually decided to use the implementation from Lausbert called Snaptake.

Is it your first time creating a UI test target for your Xcode project? If so, I recommend starting with snapshots. Read the fastlane documentation first, since it covers this well.

The code works like this:

- Fastlane runs your UI tests as it would for snapshots, but in this case it records videos.

- The lane watches the fastlane cache directory to know when to start / stop the video recording, which is done with simctl.

- simctl seems to record black frames until the simulator becomes active. To work around this, a timestamp is saved in a temporary file so the video can be trimmed at the end.

- Once the UI tests finish, the lane post-processes the recorded videos so that App Store Connect accepts them.
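
To make the mechanism above more concrete, here is a minimal Ruby sketch of the lane side. It assumes the UI test signals start and stop by creating marker files in a watched directory. The marker names (video_start, video_stop) and the helper names are hypothetical and not Snaptake’s actual code. The only real command used is xcrun simctl io <device> recordVideo <file>, which finalizes the file when it receives SIGINT.

```ruby
# Hypothetical marker files written by the UI test into the watched directory.
START_MARKER = "video_start"
STOP_MARKER = "video_stop"

# Polls `dir` until the marker file `name` exists.
# Returns true when found, false if `timeout` seconds elapse first.
def wait_for_marker(dir, name, timeout: 60, interval: 0.1)
  path = File.join(dir, name)
  deadline = Time.now + timeout
  until File.exist?(path)
    return false if Time.now > deadline
    sleep(interval)
  end
  true
end

# simctl command recording the screen of simulator `udid` (or "booted") into `output`.
def record_command(udid, output)
  ["xcrun", "simctl", "io", udid, "recordVideo", output]
end

# Starts recording when the start marker appears. Stops it with SIGINT (so
# simctl finalizes the file) when the stop marker appears or after
# `max_duration` seconds. Returns the time recording started, or nil.
def record_between_markers(dir, udid, output, max_duration: 120)
  return nil unless wait_for_marker(dir, START_MARKER)
  started_at = Time.now
  pid = Process.spawn(*record_command(udid, output))
  wait_for_marker(dir, STOP_MARKER, timeout: max_duration)
  Process.kill("INT", pid)
  Process.wait(pid)
  started_at
end
```

In a lane, this could run in a background Thread while the snapshot action drives the UI tests. Comparing the returned started_at with the time saved by the test gives the number of seconds to trim.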

2.1 Import Snaptake logic

The Snaptake project is a (working) proof of concept, with no third-party dependency to import. I highly suggest that you take a look at its GitHub README first, and then decide whether it fits your needs.

Don’t want to read it? I put the most important code in a helper class, which you can download here. Add this class to your test target (for instance next to the fastlane SnapshotHelper.swift file). We’ll use this class later.

2.2 How to customize the Snapfile depending on the lane?

You may need to customize the Snapfile for recording the videos. For instance:

- Do we really need to open the snapshot summary at the end? No, since it’s unrelated to videos: `skip_open_summary(true)`.

- Do we plan to support the same devices? If not, we should update `devices`.

- And so on.

It’s not possible to specify a different Snapfile when calling the snapshot action. However, you can add some conditions in the file to override values for a specific lane!

Let’s say your new lane is called previews. You can then override parameters, such as the languages, in the Snapfile only when this lane is running.
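
As an illustration, here is a minimal sketch of such an override. It assumes the running lane’s name is available through ENV["FASTLANE_LANE_NAME"], which fastlane sets for the current lane (check this with your fastlane version). The scheme names, languages, and devices are placeholder values.

```ruby
# Snapfile (sketch). fastlane evaluates this file as Ruby, so a plain
# conditional can switch settings. Assumes ENV["FASTLANE_LANE_NAME"]
# holds the name of the lane currently running.
if ENV["FASTLANE_LANE_NAME"] == "previews"
  # Video recording: hypothetical scheme, fewer languages and devices.
  scheme("MyProjectPreviews")
  languages(["en-US", "fr-FR"])
  devices(["iPhone 11 Pro Max", "iPhone 8 Plus", "iPad Pro (12.9-inch) (4th generation)"])
  skip_open_summary(true)
else
  # Regular screenshots (placeholder values).
  scheme("MyProjectUITests")
  languages(["en-US", "fr-FR", "de-DE"])
end
```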

2.3 How to configure the Xcode project to target the video scenario?

If you plan to create a new target, just specify the related scheme in the Snapfile for the new lane (like we discussed above).

But if you want to reuse the existing target, there is a trick!

- Duplicate the existing UI test scheme (and name it, for instance, `MyProjectPreviews`).

- Specify this scheme in the Snapfile (as written above).

- Edit the newly duplicated scheme, select “Run” -> “Arguments”, and add an environment variable named `SNAPSHOT_MODE` with the value `video`.

- Add a property to your test target that reads this environment variable.

- And finally, use this property to decide which code to run (the video scenario or the snapshot one).

In my case, I reused the existing target, as it already contains a lot of helper methods to navigate through the app. The decision is entirely up to you, and will probably depend a lot on the project.

2.4 How to start / end the video recording?

Last step: implement a scenario in your UI test. As with snapshots, you can record multiple videos.

2.5 Create a new lane!

I recommend starting with the code from Snaptake. You’ll very likely have to adapt it to your needs, so read on for some tips!

Some notes:

- I store my previews in `./fastlane/videos` instead of `./fastlane/screenshots`.

- For now, I upload the video files manually. fastlane has an undocumented API for this, but I didn’t have the courage to implement it.

- The video duration must be between 15s and 30s, and the recorded videos will likely vary by ±1s. I suggest targeting ~25s!
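
Because of that ±1s variation, it can be worth checking each recording before post-processing it. Here is a minimal Ruby sketch, assuming ffprobe (installed with Homebrew’s ffmpeg) is on the PATH. The helper names are hypothetical.

```ruby
require "open3"

# App Store Connect accepts previews between 15 and 30 seconds long.
PREVIEW_DURATION = (15.0..30.0)

# ffprobe command printing only the container duration, in seconds.
def duration_command(path)
  ["ffprobe", "-v", "error", "-show_entries", "format=duration",
   "-of", "default=noprint_wrappers=1:nokey=1", path]
end

# Runs ffprobe and returns the duration as a Float (raises if ffprobe fails).
def probe_duration(path)
  out, status = Open3.capture2(*duration_command(path))
  raise "ffprobe failed for #{path}" unless status.success?
  Float(out.strip)
end

def valid_preview_duration?(seconds)
  PREVIEW_DURATION.cover?(seconds)
end
```

For example, a lane could call probe_duration on each recording and stop early (e.g. with fastlane’s UI.user_error!) when valid_preview_duration? returns false.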

If you want to see the lane of my Fastfile, check this file.

After that, just run fastlane previews.

3. How to convert the Previews to fit App Store Connect guidelines

This section covers the different post-production filters that you may want to apply to the recorded videos (turning them into “preview” files).

- They alter the videos after fastlane has recorded them.

- They use ffmpeg as a command-line tool.

Check the end of this section to see the final ffmpeg command. You’ll be able to find the Fastfile example here.

And in case you’re looking for the official guidelines: https://help.apple.com/app-store-connect/?lang=en/#/dev4e413fcb8.

3.1 Audio track

Apple doesn’t accept videos without an audio track. But does your app really need audio for its Preview? Maybe not. You should also consider whether audio would suit your users’ taste.

A safe bet (which seems to work so far) is to add a “silent audio” track to your video to pass Apple’s automatic checks. Several apps use this trick, so it should be safe to use, for now!

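- `-f lavfi` tells ffmpeg to read the next input from a libavfilter filtergraph (the lavfi input device), so a filter source such as anullsrc can act as an input. Not to be confused with `-lavfi`, which is equivalent to `-filter_complex`.

- `-i anullsrc=channel_layout=stereo` specifies the silent audio input.

- `-shortest` makes the output stop at the end of the shortest input (here, our video).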
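
As a sketch, here is how such a command could be assembled in Ruby (e.g. in a Fastfile) as an argument array, which avoids shell-quoting problems. Only `-f lavfi`, anullsrc and `-shortest` come from the explanation above. The `-map`, codec and `-y` choices are my assumptions, and the helper name is hypothetical.

```ruby
# Builds an ffmpeg command that adds a silent stereo audio track to `input`.
def silent_audio_command(input, output)
  [
    "ffmpeg", "-y",
    # Input 0: an endless silent stereo source from the lavfi input device.
    "-f", "lavfi", "-i", "anullsrc=channel_layout=stereo",
    # Input 1: the recorded video (simctl recordings have no audio).
    "-i", input,
    # Keep the video from input 1 and the audio from input 0.
    "-map", "1:v", "-map", "0:a",
    "-c:v", "copy", "-c:a", "aac",
    # Stop when the shortest input (the video) ends.
    "-shortest",
    output
  ]
end
```

It can then be run with system(*silent_audio_command("input.mov", "output.mp4")), which passes the arguments directly without going through a shell.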

Worst case, you could find a nice track on the internet and overlay it on each video.

3.2 Trimming, … too much?

As explained earlier in this article, the video must be trimmed because simctl doesn’t record usable frames right away.

However, you may encounter another issue when trimming with ffmpeg, especially if your video repeats the same frame at the beginning. For instance, you might start the video with a 1s pause for a better viewing experience. The “bug” is that all the identical frames get removed from the video, and the “delay” goes with them.

From what I could gather, ffmpeg seems to consider this normal behavior. The single frame (which lasts 1s or more) is stored in a specific way to reduce the file size (compression). So when you trim the video, the whole frame disappears, and its whole duration with it.

To avoid that, it seems you need to either 1/ re-encode the video into a “less compressed” format, or 2/ apply a filter leading to a similar effect.

I chose solution 2/, simply by adding the “silent audio” track covered just before. Keep in mind that you apparently can’t combine the two operations in a single ffmpeg command, though that may just be the limit of my ffmpeg skills!

A simple trim relies on the `-ss` option: `-ss '00:00:02.100'` sets the position where the output starts, so here we trim the first 2.1s.
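
Since the trim position comes from timing data, it helps to compute it rather than hard-code it. Here is a Ruby sketch, assuming the lane knows when recording started and reads the time the scenario began from the temporary file mentioned earlier, both as Time objects. The helper names are hypothetical.

```ruby
# Seconds to cut from the start: the time elapsed between the start of the
# recording and the moment the UI test scenario began (never negative).
def trim_offset(recording_started_at, scenario_started_at)
  [scenario_started_at - recording_started_at, 0.0].max
end

# Formats seconds as the HH:MM:SS.mmm string accepted by ffmpeg's -ss.
def ffmpeg_timestamp(seconds)
  total_ms = (seconds * 1000).round
  hours, rest = total_ms.divmod(3_600_000)
  minutes, rest = rest.divmod(60_000)
  secs, ms = rest.divmod(1000)
  format("%02d:%02d:%02d.%03d", hours, minutes, secs, ms)
end
```

For example, a scenario starting 2.1s after the recording gives ffmpeg_timestamp(2.1), i.e. "00:00:02.100".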

However, if you apply this trim directly to a video that starts with a long pause, you’ll likely get an over-trimmed video (as explained above).

So in our case, we add the silent audio first, using two chained commands instead of one. The new options are:

- `-movflags frag_keyframe+empty_moov` avoids the error “ffmpeg muxer does not support non seekable output” (learn more here).

- `-f mp4` specifies the output format, because the first command has no output file name (such as ./output.mp4) to infer it from.

- `-` writes the first command’s output to stdout, and `|` pipes it into the second command.

- `-i '/dev/stdin'` reads that output as the second command’s input.
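
Here is a Ruby sketch that builds the two chained commands as a single shell string. The options listed above come from this article. `-map`, the codecs and `-y` are my assumptions, and the helper names are hypothetical. Array#shelljoin quotes each argument, so paths with spaces survive the shell.

```ruby
require "shellwords"

# First command: add a silent stereo track, then stream the result to stdout
# ("-") as a fragmented MP4, since a pipe isn't seekable.
def silent_audio_stage(input)
  ["ffmpeg", "-f", "lavfi", "-i", "anullsrc=channel_layout=stereo",
   "-i", input, "-map", "1:v", "-map", "0:a", "-c:v", "copy", "-c:a", "aac",
   "-shortest", "-movflags", "frag_keyframe+empty_moov", "-f", "mp4", "-"]
end

# Second command: read the stream from stdin and drop everything before
# `timestamp` (HH:MM:SS.mmm).
def trim_stage(timestamp, output)
  ["ffmpeg", "-y", "-i", "/dev/stdin", "-ss", timestamp, output]
end

# Quotes every argument for the shell and chains both commands with a pipe.
def add_audio_then_trim(input, timestamp, output)
  [silent_audio_stage(input), trim_stage(timestamp, output)]
    .map(&:shelljoin)
    .join(" | ")
end
```

In a Fastfile, the resulting string could be passed to fastlane’s sh action. In plain Ruby, you would pass it to system.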

The other options have already been explained.

I didn’t find a better way to do it all in one command without running into the trimming issue. But feel free to reach out if you have an idea!

3.3 Device orientation and screen size

You support iPad? And your video is in landscape?

When you set the device orientation (e.g. XCUIDevice.shared.orientation = .landscapeLeft), it doesn’t really rotate the device the way you would when playing with the simulator. The content will be in landscape, but the video is rotated as if it had been recorded in portrait mode. Just make sure to rotate the video before resizing it, or take this into account when choosing the new size.

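In the Fastfile, I added a simple check that rotates landscape videos with ffmpeg’s transpose filter:

- `transpose=2` rotates the video 90° counterclockwise (`transpose=1` would rotate it 90° clockwise).

- The `,` separates it from the other filters in the chain.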
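
The check can compare the recorded frame size with the orientation the scenario is meant to have. Here is a Ruby sketch, assuming ffprobe is available and that each scenario is (hypothetically) tagged as landscape or not in the lane:

```ruby
require "open3"

# ffprobe command printing the first video stream's size as "WIDTHxHEIGHT".
def dimensions_command(path)
  ["ffprobe", "-v", "error", "-select_streams", "v:0",
   "-show_entries", "stream=width,height", "-of", "csv=s=x:p=0", path]
end

# Parses output such as "1170x2532\n" into [1170, 2532].
def parse_dimensions(text)
  text.strip.split("x").map { |value| Integer(value) }
end

# Runs ffprobe on the recording and returns [width, height].
def probe_dimensions(path)
  out, status = Open3.capture2(*dimensions_command(path))
  raise "ffprobe failed for #{path}" unless status.success?
  parse_dimensions(out)
end

# A scenario meant to be landscape whose frames are taller than wide was
# recorded "as if in portrait", so it needs a transpose before scaling.
def needs_rotation?(width, height, landscape:)
  landscape && width < height
end
```

If needs_rotation? returns true, transpose=2 goes at the front of the filter chain.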

As for the screen size, this is probably the most frustrating part. The recorded video won’t have the same aspect ratio as the accepted dimensions, and it may need to be downscaled (losing quality) or even upscaled (WTF??).

Either way, you’ll have to map each device model to the proper accepted dimensions (and this map will change every year, or with new Xcode versions).

In practice, this means keeping a map of “well-known devices => accepted dimensions”.
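
Here is a Ruby sketch of such a map. The keys are simulator device names. The values are portrait App Preview resolutions as I understand Apple’s specifications at the time of writing. Treat them as assumptions and verify them against Apple’s guidelines linked above, since they change.

```ruby
# Accepted App Preview sizes in portrait, [width, height] in pixels.
# Assumed values; double-check Apple's current specifications.
PREVIEW_SIZES = {
  "iPhone 11 Pro Max" => [886, 1920],                     # 6.5" display
  "iPhone 8 Plus" => [1080, 1920],                        # 5.5" display
  "iPad Pro (12.9-inch) (4th generation)" => [1200, 1600] # 12.9" display
}.freeze

# Returns [width, height] for the device, swapped for landscape videos.
# Raises KeyError for unknown devices so a missing entry is noticed early.
def preview_size(device, landscape: false)
  width, height = PREVIEW_SIZES.fetch(device)
  landscape ? [height, width] : [width, height]
end
```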

Then create a single ffmpeg command for both the rotation and the scaling, ending the filter chain with `setsar=1`. This resets the SAR (sample aspect ratio) to 1, so that the display resolution is the same as the stored resolution (says our best friend).
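
Here is a Ruby sketch of that combined command. It assumes the target size comes from a device map like the one described above, and that rotation is decided by a check like the one in the previous section. The helper names are hypothetical.

```ruby
# Builds the -vf value: optional rotation, scaling to the accepted size,
# then setsar=1 for square pixels. Filters are separated by commas.
def video_filter(width, height, rotate: false)
  filters = []
  filters << "transpose=2" if rotate # 90° counterclockwise
  filters << "scale=#{width}:#{height}"
  filters << "setsar=1"
  filters.join(",")
end

# Rotates (if needed) and scales the video, copying the audio track as-is.
def rotate_and_scale_command(input, output, width, height, rotate: false)
  ["ffmpeg", "-y", "-i", input,
   "-vf", video_filter(width, height, rotate: rotate),
   "-c:a", "copy", output]
end
```

Scaling straight to the target size stretches the picture when the aspect ratios differ. The pad or crop filters are alternatives if the stretching is noticeable.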

3.4 Video encoding

Last but not least, your video needs to be encoded in a specific way. While you could probably apply these parameters in each previous step, I decided to apply all of them at the end to make sure none of them gets overridden (but is it the best way? not sure).

For this step, I mostly reused the command line from Snaptake!
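
For illustration, here is a hedged Ruby sketch of such an encoding step. It is based on my reading of Apple’s App Preview specifications (H.264 High Profile Level 4.0, up to 30 fps, stereo AAC at 256 kbps). The exact values, in particular the 10 Mbps video bitrate, are assumptions to check against Apple’s current requirements.

```ruby
# Final encoding arguments (assumed values, see above).
def encoding_args
  [
    "-c:v", "libx264", "-profile:v", "high", "-level", "4.0",
    "-pix_fmt", "yuv420p", # widely compatible 8-bit 4:2:0 pixels
    "-r", "30",            # constant 30 fps output
    "-b:v", "10M",
    "-c:a", "aac", "-b:a", "256k", "-ar", "48000", "-ac", "2"
  ]
end

# Re-encodes `input` into the final preview file.
def encode_command(input, output)
  ["ffmpeg", "-y", "-i", input, *encoding_args, output]
end
```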

3.5 All in one!

My Fastfile combines all of these steps in a single lane (see the Fastfile example linked at the start of this section).
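
When combining everything, it helps to process each recording once and write the result next to it. Here is a Ruby sketch of that bookkeeping. It assumes recordings live under ./fastlane/videos as .mov or .mp4 files, and that previews get a hypothetical _preview.mp4 suffix.

```ruby
# Maps a recorded file to its post-processed name,
# e.g. "videos/en-US/home.mov" -> "videos/en-US/home_preview.mp4".
def preview_path(recording)
  dir = File.dirname(recording)
  base = File.basename(recording, File.extname(recording))
  File.join(dir, "#{base}_preview.mp4")
end

# Lists recordings under `root` that still need processing, skipping files
# that are previews themselves or whose preview already exists.
def pending_recordings(root)
  Dir.glob(File.join(root, "**", "*.{mov,mp4}")).sort.reject do |path|
    File.basename(path, ".*").end_with?("_preview") ||
      File.exist?(preview_path(path))
  end
end
```

In the lane, each path returned by pending_recordings("./fastlane/videos") would then go through the ffmpeg steps above, with preview_path(path) as the final output.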

3.6 Final tips

- Don’t generate all the videos before test-uploading at least a few of them to Apple. That way, if a problem is detected, you won’t have to re-run your script several times. Test with at least one iPhone and one iPad preview (when applicable).

- Don’t try to make your code too generic, and comment the non-obvious commands as much as possible.

4. Final thoughts: Apple and the Previews

It seems Apple isn’t doing much to help the community create automated previews.

- It probably hopes that the community (e.g. fastlane) will achieve it someday, so it can focus on more “core” problems.

- Or that developers will produce more creative videos (as long as they respect the guidelines, meaning they display content available in the app).

However, providing help on this matter could dramatically improve the App Store experience for users. Not sure what the app does, or whether it’s worth your time? Just watch this 30s video! (And then, maybe, don’t download the app.)

But in perspective, it mostly matters if your app is top-ranked or featured by the editorial team, which concerns a very small portion of lucky developers. Or does it really help with “ASO”? We’ll see :-)

Author Vinzius

LastMod 12 April, 2021